S3 Object Locking

This feature requires Comet 23.3.1 or later.

Overview

S3 object locking support in Comet provides anti-ransomware protection by preventing stored files from being overwritten or deleted. Comet implements S3 object locking in Compliance mode, which locks files for a specified retention period during which they cannot be altered.

Data remains protected even if the client is compromised, because the S3 storage will not allow the client to delete or alter an Object Locked file version until its retention period has expired.

For a quick visual overview, see our TLDR.

The AWS documentation has more details.

Object locking can be configured in Comet in two ways: via Storage Templates, or manually. Storage Templates are somewhat simpler, but are currently implemented only for AWS/S3 and Wasabi.

Configuring the S3 bucket

To use S3 Object Locking, the storage bucket must support S3 Versioning and have S3 Versioning enabled. The AWS documentation has more details.
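If you manage the bucket yourself, you can confirm that versioning is enabled with the AWS CLI before creating the Storage Vault. This is a minimal sketch, assuming the AWS CLI is installed and configured with credentials for the bucket; the function name `check_versioning` and the optional `ENDPOINT_URL` variable (for S3-compatible providers such as Wasabi) are illustrative and not part of Comet.

```bash
# Sketch: confirm versioning is enabled on a bucket before using it for an
# Object Locked Storage Vault. Assumes the AWS CLI is installed and configured.
# ENDPOINT_URL is an optional, illustrative variable for S3-compatible
# providers (for example https://s3.wasabisys.com for Wasabi).
check_versioning() {
  local bucket="$1"
  local extra=()
  if [ -n "${ENDPOINT_URL:-}" ]; then
    extra=(--endpoint-url "$ENDPOINT_URL")
  fi

  # Status is "Enabled" or "Suspended"; it is absent if versioning was never enabled.
  local status
  status=$(aws s3api get-bucket-versioning --bucket "$bucket" "${extra[@]}" \
    --query Status --output text) || return 2

  if [ "$status" = "Enabled" ]; then
    echo "versioning enabled on $bucket"
    return 0
  fi
  echo "versioning NOT enabled on $bucket (status: $status)" >&2
  return 1
}
```

For example, `check_versioning my-comet-bucket` (a placeholder name) returns 0 only when versioning is enabled, 1 when it is not, and 2 if the CLI call itself fails.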

To enable S3 Versioning on Wasabi buckets, follow the Wasabi documentation.

AWS and Wasabi support two modes of object locking: Compliance and Governance. Comet works only with Compliance mode.

We recommend not setting a default object lock retention period on the bucket. Comet sets retention on a per-object basis for important files (encryption keys, data, snapshots) and applies no retention to transient files (locks, index files).
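To see which files Comet has locked, you can query an individual object's retention with the AWS CLI. This is a minimal sketch for inspection only, assuming the AWS CLI is configured for the bucket; `show_retention` is a hypothetical helper and the bucket and key are placeholders. Comet applies retention itself, so nothing here needs to be run for locking to work.

```bash
# Sketch: print the lock mode and retain-until date of one object (the current
# version). Assumes the AWS CLI is configured. Objects without retention, such
# as transient lock or index files, are expected to make get-object-retention
# return an error instead.
show_retention() {
  local bucket="$1" key="$2"
  aws s3api get-object-retention --bucket "$bucket" --key "$key" \
    --query 'Retention.[Mode,RetainUntilDate]' --output text
}
```

For a data file written by an Object Locked Storage Vault, the mode printed should be `COMPLIANCE`.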

When using Wasabi, a bucket with the correct settings will be created by the Comet Management Console if a non-existent bucket name is entered in a Storage Template.

Storage Templates

Storage Templates directly support object lock for AWS/S3 and Wasabi. Other IAM-compatible S3 providers can use the Custom IAM-compatible (Object Lock) template. Because IAM compatibility varies between vendors, we cannot guarantee that every vendor's IAM-compatible offering will work with the Custom IAM-compatible (Object Lock) template.

To use object lock with IDrive or other S3 compatible storage, you will need to configure object lock manually.

Storage Templates can be set up in the Comet web interface at Settings / Roles / Authentication / Storage Templates.

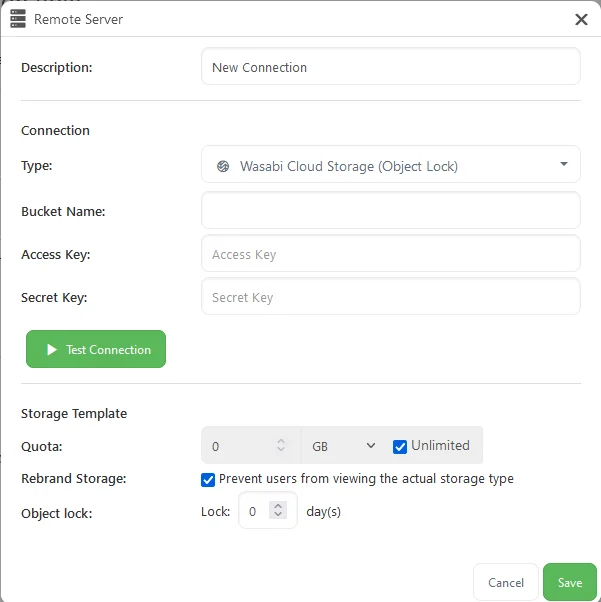

The storage template configuration lets you set up a template for creating Storage Vaults. To use object locking in a template:

- Select a template type that supports Object Lock, as indicated in the template's name.

- Optionally, set a retention period in the Object Lock / Lock days field.

- Optionally, deselect 'Prevent users from viewing the actual storage type'.

Enabling Object Lock means that the S3 bucket created for the template will have object locking enabled. This can only be done when the bucket is created, and it is irreversible.

The retention period is the default number of days to lock objects stored in a vault. This can be set to zero, in which case no object retention will apply unless the retention time is edited in the created Storage Vault.
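The link between the Lock days value and the lock on an individual object can be sketched as a date calculation: an object written now is locked until now plus the given number of days. This is an illustration only, assuming GNU `date` (Linux; macOS `date` uses different flags); `retain_until` is a hypothetical helper, not a Comet function, and Comet's exact rounding is not described here.

```bash
# Sketch: convert a "Lock days" value into the UTC retain-until timestamp an
# object written now would receive. Hypothetical helper, not a Comet function.
# Requires GNU date (Linux) for "date -d @EPOCH".
retain_until() {
  local lock_days="$1"
  local now_epoch="${2:-$(date -u +%s)}"  # optional fixed "now", useful for testing

  if [ "$lock_days" -le 0 ]; then
    # Zero days: no retention is applied.
    return 1
  fi
  date -u -d "@$(( now_epoch + lock_days * 86400 ))" +%Y-%m-%dT%H:%M:%SZ
}
```

For example, `retain_until 30` prints the timestamp 30 days from now, while `retain_until 0` prints nothing and returns non-zero, matching the "no object retention" case.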

If you deselect the option to prevent users from viewing the actual storage type, users will be able to edit the retention period of Storage Vaults created from the template.

Once the storage template is created, a user can use it to create a Storage Vault which will have object lock enabled.

Manually Set up Object Lock in Storage Vault

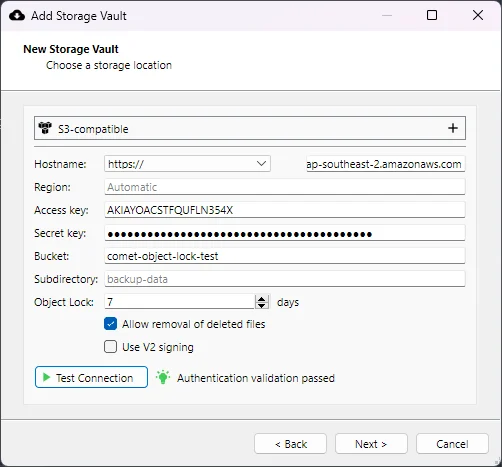

Object locking can be configured manually when setting up a Storage Vault using AWS/S3, Wasabi, IDrive, Impossible Cloud, or other S3-compatible storage. When you create a Storage Vault with one of these storage providers, the option to enable object locking is available.

To utilise object locking in this way, you must first create a bucket with object lock enabled in your chosen S3 Storage Provider.
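For AWS/S3, one way to create such a bucket is with the AWS CLI, whose `create-bucket` command accepts `--object-lock-enabled-for-bucket`. This is a minimal sketch, assuming the AWS CLI is installed and configured; `create_locked_bucket`, the bucket name and the region are placeholders. Other providers (Wasabi, IDrive, Impossible Cloud) may require their own console or an `--endpoint-url`, which this sketch does not handle. No default retention is set, in line with the recommendation above.

```bash
# Sketch: create an AWS S3 bucket with Object Lock enabled, which also enables
# versioning. Assumes the AWS CLI is installed and configured; the bucket name
# and region are placeholders. No default retention is configured.
create_locked_bucket() {
  local bucket="$1" region="${2:-us-east-1}"
  local args=(s3api create-bucket --bucket "$bucket" --region "$region"
              --object-lock-enabled-for-bucket)

  # Outside us-east-1, create-bucket needs an explicit LocationConstraint.
  if [ "$region" != "us-east-1" ]; then
    args+=(--create-bucket-configuration "LocationConstraint=$region")
  fi
  aws "${args[@]}" || return 1

  # Confirm the result; this should print "Enabled".
  aws s3api get-object-lock-configuration --bucket "$bucket" --region "$region" \
    --query ObjectLockConfiguration.ObjectLockEnabled --output text
}
```

For example, `create_locked_bucket my-comet-vault eu-west-1` creates the bucket in that region and then prints its Object Lock status; the bucket name is then entered when setting up the Storage Vault.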

If Object Lock days is set to 1 or greater, object locking will be enabled for the Storage Vault using the specified retention period. Object locking cannot be added later to a vault created without it, nor can it be turned off once the bucket has been created.

The Allow removal of deleted files option enables cleanup of files marked for deletion in an S3 bucket with versioning enabled (see below).

Allow Removal of Deleted Files

Enabling object locking on S3 storage also enables versioning. (For more information about how this is implemented, see the AWS versioning documentation.)

When versioning is enabled, a new version is created whenever a file is altered. The new version has the same name (key) but a new version ID. When a user 'deletes' a versioned file, the original version is not removed from storage; instead, a delete marker is created with the same file name and a new version ID (a delete marker is implemented as a zero-byte placeholder with metadata designating it as a delete marker).

When a versioned S3 bucket is listed normally, the storage provider shows only the most recent version of each file, and a file whose most recent version is a delete marker does not appear at all. However, the earlier versions of that file still exist and can still be retrieved. A file in S3 storage may have many versions.
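The listing behaviour described above can be illustrated with a toy model that replays a log of writes and deletes. This is not an S3 API call: each input line is a hypothetical `<key> <version> <PUT|DEL>` record, oldest first, and real S3 version IDs are opaque strings rather than the `v1`, `v2` labels used here.

```bash
# Toy model of a versioned bucket (no S3 calls). Input lines are
# "<key> <version> <PUT|DEL>", oldest first; the labels are illustrative.

# A normal listing shows a key only if its most recent version is not a delete marker.
normal_listing() {
  awk '{ latest[$1] = $3 } END { for (k in latest) if (latest[k] != "DEL") print k }' | sort
}

# Every stored file version still exists, including those hidden behind a delete marker.
all_versions() {
  awk '$3 == "PUT" { print $1, $2 }'
}
```

For example, `printf 'a.txt v1 PUT\nb.txt v1 PUT\nb.txt v2 DEL\n' | normal_listing` prints only `a.txt`, while `all_versions` on the same input still reports both stored file versions.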

A consequence of this behaviour is that old unneeded backups will accumulate and take up increasing space over time.

When the Allow removal of deleted files option is selected on a Storage Vault, Comet removes the delete markers and all underlying versions of files that have delete markers, but ONLY for files that are outside their object lock retention period (S3 storage will not allow these files to be removed while they are within their retention period). This cleanup occurs during Comet's normal retention pass.
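The rule above can be sketched as a filter over a bucket's version records. This is a hypothetical illustration, not Comet's implementation: the input format `<key> <version> <PUT|DEL> <retain-until-epoch>` (oldest first, `0` meaning no retention) and the name `removable_versions` are assumptions made for the example.

```bash
# Hypothetical sketch of the cleanup rule; not Comet's implementation.
# Input lines: "<key> <version> <PUT|DEL> <retain-until-epoch>", oldest first
# (0 means no retention). Prints "<key> <version>" for every version that may
# be removed at time NOW: the key's newest version is a delete marker and no
# version of that key is still inside its retention period.
removable_versions() {
  local now="$1"
  awk -v now="$now" '
    {
      n[$1]++
      ver[$1, n[$1]] = $2
      latest[$1] = $3
      if ($4 + 0 > now + 0) locked[$1] = 1   # still within retention
    }
    END {
      for (k in latest)
        if (latest[k] == "DEL" && !(k in locked))
          for (i = 1; i <= n[k]; i++) print k, ver[k, i]
    }' | sort
}
```

For a file whose lock expired at time 100 and whose newest version is a delete marker, `removable_versions 200` lists every version of it, including the marker; a deleted file still locked until time 300 is left alone, as is any file whose newest version is not a delete marker.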

Note that this feature is independent of object locking: it can be enabled for any S3 bucket with versioning enabled. In that case, consider carefully the data safety consequences of automatically removing earlier versions of your data.

Retrieval of 'Deleted' Files

If someone tries to delete all of your files, either maliciously or by mistake, object locking ensures that files within their retention period cannot be removed. However, versioning means that a "soft delete" is always possible: the original file version remains, but a new delete marker is written for the file with a new version ID, making it look as if the file no longer exists. It is recommended to reindex the Storage Vault after restoring deleted files.

So what do you do if this occurs? There are a few options for recovery, depending on which storage provider you're using and which tools you prefer to use. Here are a few:

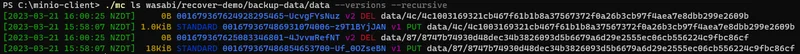

Bash / MinIO Client

Here's a bash script that works with the MinIO command line client (mc). It removes ALL delete markers in the specified directory, so any soft-deleted files will be recovered. It has been tested on AWS/S3 and MinIO, but does not work for Wasabi. The script requires that you have set up an alias for your storage provider using mc, and it assumes that you're running it from the directory where mc is installed.

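```bash
#!/bin/bash

# MC alias and path from which delete markers should be removed.
# NB: do not include a trailing slash.
DIR="s3/recover-test/prefix/data"

# List all versions in the named directory, keep only delete markers ("DEL"),
# then remove each marker by its version ID so the previous version becomes current.
# $5 (version ID) and $8 (object name) match the mc output this was tested with;
# check the columns of './mc ls --versions' on your mc release before running.
# Add '--dry-run' just after 'rm' in the awk print statement to try out the action
# without making any changes (a successful dry run will raise no errors).
./mc ls "$DIR" --versions --recursive | grep ' DEL ' | awk -v dir="$DIR" '{print "rm --version-id " $5, dir "/" $8}' | xargs -L 1 ./mc
```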
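If you prefer the AWS CLI to mc, a similar recovery can be sketched with `aws s3api list-object-versions` and `aws s3api delete-object`: deleting a delete marker by its version ID makes the previous version current again. This is an untested sketch, assuming the AWS CLI is installed and configured; `remove_delete_markers`, `DRY_RUN` and `ENDPOINT_URL` are illustrative names, and it has not been verified against Wasabi.

```bash
# Sketch: remove the current (latest) delete markers under a prefix with the
# AWS CLI, restoring the previous version of each file. Untested against Comet
# vaults and Wasabi. Set DRY_RUN=1 to only print what would be removed, and
# ENDPOINT_URL for S3-compatible providers.
remove_delete_markers() {
  local bucket="$1" prefix="$2"
  local extra=()
  if [ -n "${ENDPOINT_URL:-}" ]; then
    extra=(--endpoint-url "$ENDPOINT_URL")
  fi

  aws s3api list-object-versions --bucket "$bucket" --prefix "$prefix" "${extra[@]}" \
    --query 'DeleteMarkers[?IsLatest==`true`].[Key,VersionId]' --output text |
  while IFS=$'\t' read -r key vid; do
    # Skip lines without a version ID (e.g. a page of results with no delete markers).
    [ -n "$vid" ] || continue
    if [ -n "${DRY_RUN:-}" ]; then
      echo "would remove delete marker: $key ($vid)"
    else
      aws s3api delete-object --bucket "$bucket" --key "$key" \
        --version-id "$vid" "${extra[@]}" >/dev/null && echo "restored: $key"
    fi
  done
}
```

For the bucket in the mc example, `DRY_RUN=1 remove_delete_markers recover-test prefix/data/` lists the markers that would be removed; running it again without `DRY_RUN` removes them, after which the Storage Vault should be reindexed.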

Wasabi Options

The Wasabi knowledge base describes two ways to remove delete markers from files. The first is a Python script for file recovery, which may be preferable for organisations that already use Python. The second is s3undelete, a standalone executable for removing delete markers that runs on Windows and Mac.

Video tutorial

Watch this quick video on how to set up Object Locked storage with Comet.

TLDR

To summarise, in Comet, object lock and allow removal of deleted files work as follows:

- Comet backups are made up of snapshots, which consist of data chunks.

- Chunks represent pieces of files. If a chunk remains the same between backups, Comet re-references the same chunk.

- When it's time to delete old backup jobs, Comet only needs to delete chunks that are no longer used by any snapshot.

- Of course, if Comet can delete your data, then malware might be able to as well!

- Fortunately, S3 Object Lock can completely shield a file from being deleted.

- But how does that work with Comet retention passes? Comet needs to be able to remove old, outdated backup jobs at some point.

- Comet with Object Lock gets around this by applying a delete marker to the data, or 'tombstoning' it. Instead of really deleting the data, we just make it look deleted.

- So, we start by applying Object Lock to all the different files. When a retention pass wants to delete a snapshot, we tombstone it instead.

- Eventually, the Object Lock shield expires, but Comet keeps it up to date for the non-tombstoned files.

- ...and once the Object Lock has expired, Comet is free to properly delete these unneeded files, freeing up space on the S3 storage.
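The chunk bookkeeping in the first steps of this summary can be sketched with standard tools: a chunk becomes a deletion candidate only when none of the snapshots being kept still references it. The file layout here is hypothetical (one chunk ID per line, one file per snapshot) and does not reflect Comet's storage format; with Object Lock, such candidates would be tombstoned first and only removed once their lock has expired.

```bash
# Toy illustration with a hypothetical layout (one chunk ID per line): print the
# chunks that no kept snapshot references. These are the deletion candidates.
unreferenced_chunks() {
  local all_chunks="$1"
  shift  # remaining arguments: snapshot files that are being kept
  if [ "$#" -eq 0 ]; then
    sort -u "$all_chunks"   # nothing kept, so nothing is referenced
    return
  fi
  # comm -23 prints lines found only in the first (sorted) input.
  comm -23 <(sort -u "$all_chunks") <(cat -- "$@" | sort -u)
}
```

For example, with stored chunks `c1` to `c4` and kept snapshots referencing `c1 c2` and `c2 c3`, only `c4` is reported; chunk `c2`, shared by both snapshots, stays until neither needs it.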