Comet chunking overview

Overview

At the core of Comet is our technology that allows us to back-up and restore faster than the competition: this is called "Chunking".

Backup algorithm

Comet backs up data by first splitting it into variable-sized chunks, which are individually compressed, encrypted, and uploaded. Comet uses data-dependent chunking, efficiently splitting a file into consistent chunks even in the face of random inserts.

A backup job consists of a list of files and which chunks would be needed to reconstruct them. Any successive incremental backup jobs simply realize that chunks already exist and do not need to be re-uploaded.

This chunking technique has the following properties:

- Both the oldest and the most recent backup jobs can be restored with the same speed

- Duplicate data does not require any additional storage, since the chunks are the same ("deduplication")

- There is never any need to re-upload the full file, regardless of the number of backup jobs

- There is no need to be trusted to decrypt data.

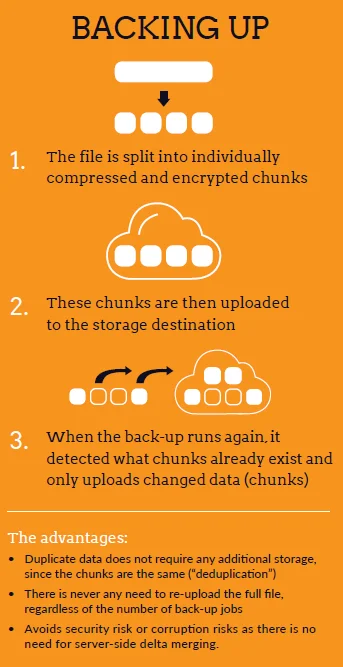

Backing up

Comet backs up data by first splitting it into variable-sized chunks, which are individually compressed, encrypted, and uploaded. Comet uses data-dependent chunking, efficiently splitting a file into consistent chunks even in the face of random inserts.

Further incremental back-up jobs simply realize that chunks already exist and do not need to be re-uploaded.

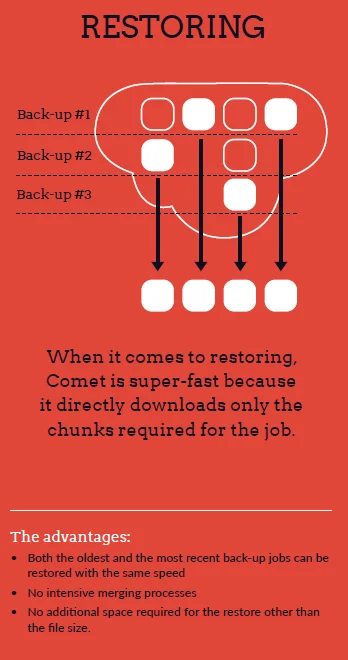

Restoring

When it comes to restoring, Comet is just as fast. Comet directly downloads only the chunks it needs for the file and requires no additional space other than the size of the file and has no CPU intensive merging processes.